By Sally C. Morton, PhD

Professor and Chair, Department of Biostatistics, University of Pittsburgh

Vice Chair, IOM Committee on Standards for Systematic Reviews of Comparative Effectiveness Research

Introduction

The PCORI standard that indirectly incorporates the IOM SR standards is RQ-1, which concerns identifying gaps in evidence. This standard requires that, if no SR addressing the gap has been conducted, researchers should perform one according to PCORI standard SR-1 or give sufficient reason why an SR is not possible. Notably, all PCORI projects, regardless of purpose or design, must follow the RQ-1 standard, which shows how wide an impact the IOM SR Report can have.

Influence of IOM Report

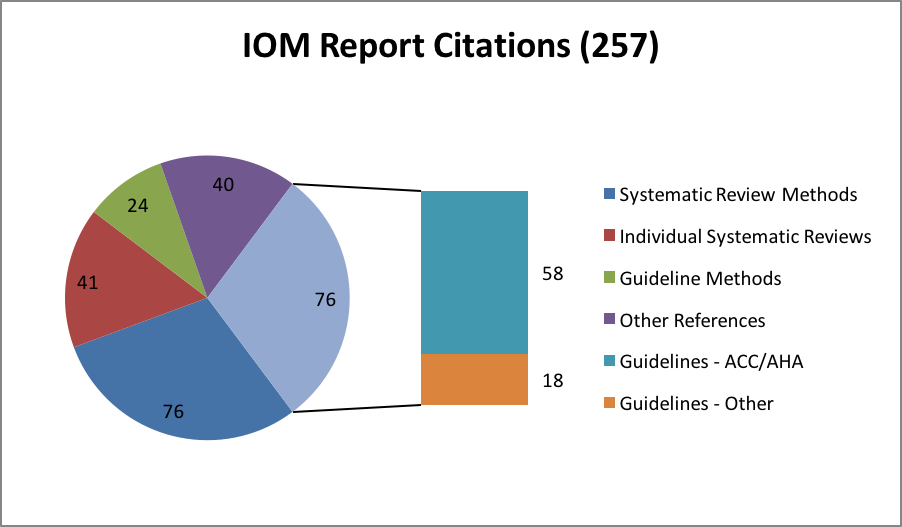

A recent search[6] of Scopus and Web of Science, conducted to further examine the influence of the IOM SR Report, identified 257 citations of the report since its publication. Seventy-six (30%) of these references concerned specific guidelines, and 58 of those were American College of Cardiology (ACC)/American Heart Association (AHA) guidelines, with a single guideline often published in several journals. The ACC/AHA, a leading producer of guidelines, updated the SR methodology in its guideline development process specifically to address the IOM SR standards. The remaining 18 of the 76 guideline references concerned individual guidelines from a wide array of institutions, covering a variety of conditions.

Among the 76 references on SR methods is Chang et al.,[11] a response from the Agency for Healthcare Research and Quality (AHRQ) Evidence-Based Practice Center (EPC) Program to the IOM SR Report. These authors underscore the aspirational nature of the report and offer advice on how to meet the standards with limited time and resources. They also counsel flexibility and efficiency, a theme echoed in the PCORI SR-1 adaptation of the IOM standards. Additional references demonstrate the considerable contribution of the EPC Program to SR methods.[12] The Cochrane Collaboration, one of the expert guidance sources used in the IOM SR Report, is also a leader in methodological contributions, and several of the 76 references on SR methods come from Cochrane collaborators or use Cochrane resources.

Conclusion

Several of the 76 references focus on particular steps in an SR, as foreshadowed by the IOM SR Report’s identification of open questions. Highly topical methodological and procedural challenges such as automatic data extraction,[13] complex interventions,[14] language restriction,[15] and screening approaches[16] are addressed, though network meta-analysis,[17] which is very relevant for SRs of comparative effectiveness research, is not. Suffice it to say that the report’s goal of setting expectations with accountability without stifling innovation has been realized. Despite the positive influence of the IOM SR Report, however, I must quote my colleague Dr. Greenfield, whose Commentary[18] addressed progress since the IOM CPG Report: “The stakes are high and much remains to be done. The public is counting on us all.” I agree completely.

References

- IOM (Institute of Medicine). 2011. Finding what works in health care: Standards for systematic reviews. Eden J, Levit L, Berg A, Morton S (editors). (“SR Report”). Available at http://www.nap.edu/catalog/13059/finding-what-works-in-health-care-standards-for-systematic-reviews. Accessed September 9, 2015.

- IOM (Institute of Medicine). 2011. Clinical practice guidelines we can trust. Graham R, Mancher M, Wolman DM, Greenfield S, Steinberg E (editors). (“CPG Report”). Available at http://www.nap.edu/catalog/13058/clinical-practice-guidelines-we-can-trust. Accessed September 9, 2015.

- IOM CPG Report 2011, page 4.

- IOM CPG Report 2011, page 7.

- PCORI (Patient-Centered Outcomes Research Institute) Methodology Committee. 2013. The PCORI Methodology Report. Available at http://www.pcori.org/research-results/research-methodology. Accessed September 9, 2015.

- Search conducted on September 3, 2015.

- IOM SR Report 2011, page 3.

- IOM SR Report 2011, page 4.

- IOM SR Report 2011, page 156.

- IOM SR Report 2011, page 159.

- Chang SM, Bass EB, Berkman N, Carey TS, Kane RL, Lau J, Ratichek S. Challenges in implementing The Institute of Medicine systematic review standards. Systematic Reviews 2013;2:69.

- Chang SM. The Agency for Healthcare Research and Quality (AHRQ) Effective Health Care Program Methods Guide for comparative effectiveness reviews: Keeping up-to-date in a rapidly evolving field. J Clin Epidemiol 2011;64:1166-1167.

- Jonnalagadda SR, Goyal P, Huffman MD. Automating data extraction in systematic reviews: A systematic review. Systematic Reviews 2015;4:78.

- Squires JE, Valentine JC, Grimshaw JM. Systematic reviews of complex interventions: Framing the review question. J Clin Epidemiol 2013;66:1215-1222.

- Wang Z, Brito JP, Tsapas A, Griebeler ML, Alahdab F, Murad MH. Systematic reviews with language restrictions and no author contact have lower overall credibility: A methodology study. Clin Epidemiol 2015;7:243-247.

- Mateen F, Oh J, Tergas AI, Bhayani NH, Kamdar BB. Titles versus titles and abstracts for initial screening of articles for systematic reviews. Clin Epidemiol 2013;5:89-95.

- Salanti G, Del Giovane C, Chaimani A, Caldwell DM, Higgins JPT. Evaluating the quality of evidence from a network meta-analysis. PLOS ONE 2014;9(7):e99682.

- Greenfield S. The Institute of Medicine Standards for Trustworthy Clinical Practice Guidelines: 4 Years Later. May 2015. Accessed September 9, 2015.