Assessing the Evidence Series

By Rebecca L Diekemper, MPH, Doctor Evidence, Santa Monica, CA,

Belinda K Ireland, MD, MS, TheEvidenceDoc, Pacific, MO,

and Liana R Merz, PhD, MPH, Center for Clinical Excellence, BJC HealthCare, Saint Louis, MO

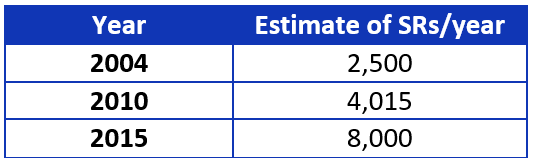

Systematic reviews (SRs) are essential for evidence-based guideline development. Why? Currently, they are the most rigorous and least biased method we have to locate, evaluate, summarize, and translate existing evidence. The Institute of Medicine (IOM) and Guidelines International Network (G-I-N) “recommend” using SRs,1,2 while the National Guideline Clearinghouse (NGC) “requires” that guidelines be based on SRs.3 Guideline developers who rely on existing SRs know that SR production has increased, as Table 1 shows.

Table 1. Yearly estimates of systematic review publication.4-6

Past reviews were often limited by biased selection of studies (those that were easy to find or that supported the prevailing belief) and by a lack of quality assessment. These and other factors led to reviews with unreliable findings. Development standards now exist from the IOM, the Agency for Healthcare Research and Quality (AHRQ), G-I-N, NGC, and others.1-3,7 There are also PRISMA reporting standards, and tools for evaluating existing SRs based on those standards.8 But SR quality still varies.

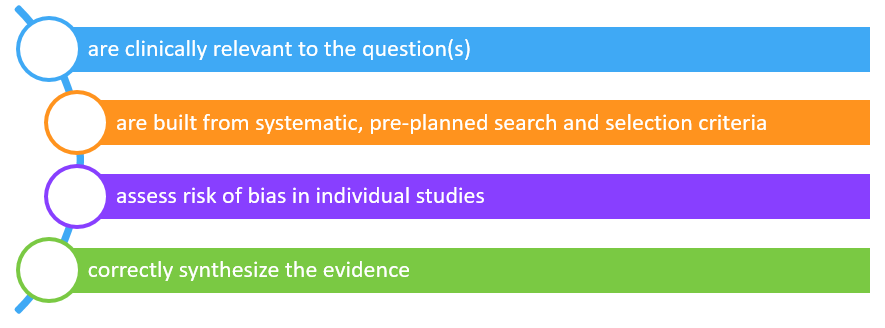

Before SRs can be used as the evidence base for a guideline, the SRs must be evaluated to ensure they:

Even though the Overview Quality Assessment Questionnaire (OQAQ) and the Assessment of Multiple Systematic Reviews (AMSTAR) include these concepts,9,10 we found these existing tools for evaluating systematic reviews did not meet all our needs.

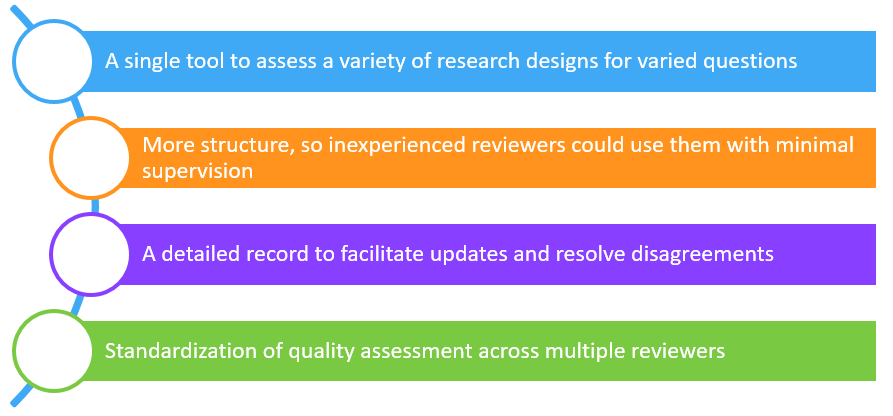

We also needed:

So, we set out to create a tool to meet those needs.

We started by looking at the Cochrane Handbook for Systematic Reviews of Interventions11 and the Sacks article on meta-analyses of randomized controlled trials.12 Ultimately, we identified 2 validated tools for assessing the quality of systematic reviews: the OQAQ, developed by Oxman and Guyatt,9 and AMSTAR, developed by Shea et al.10

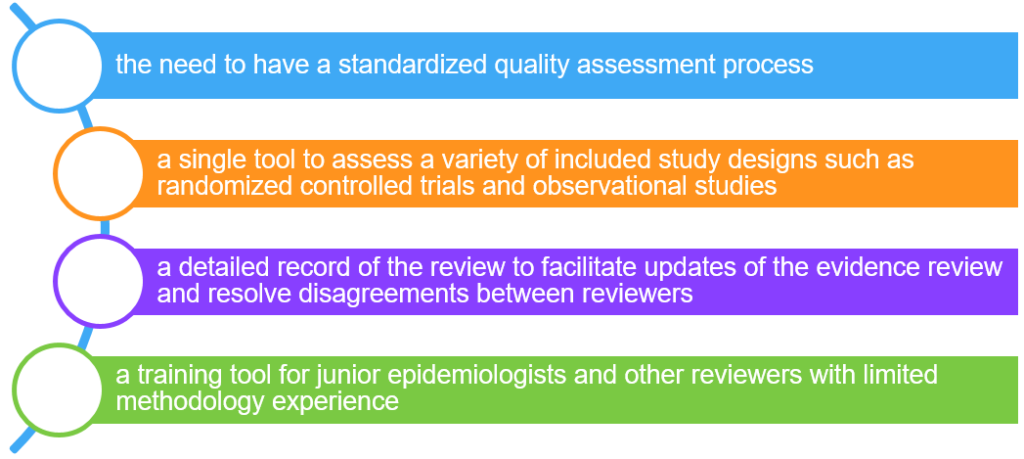

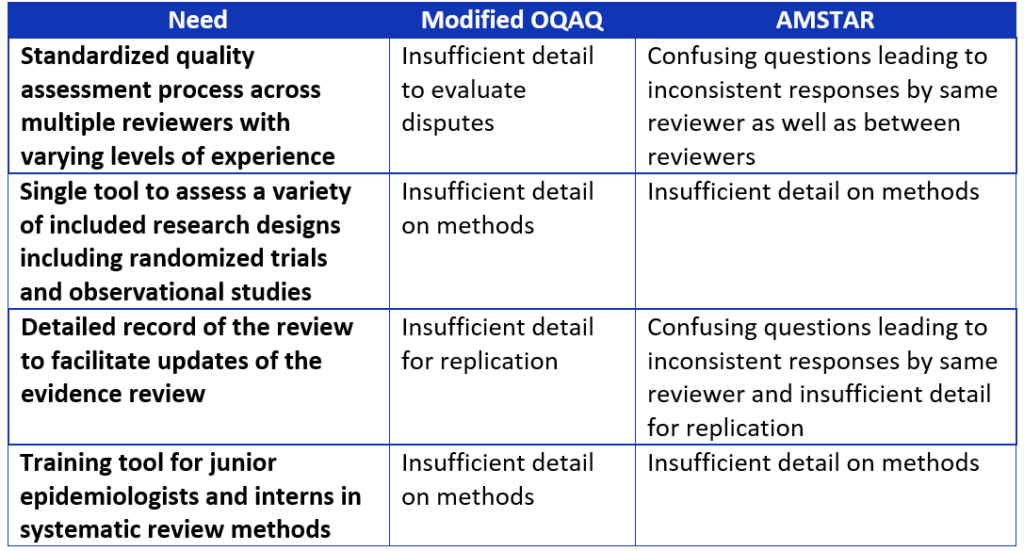

As we were determining the criteria that should be included in our tool, we identified several needs that were not being adequately addressed by the OQAQ and AMSTAR tools,9,10 including:

Table 2. Comparison of systematic review assessment tools.

When we were developing the Documentation and Appraisal Review Tool (DART), we wanted to make sure that anyone could use it, regardless of their background and experience in appraising systematic reviews: clinicians, guideline developers, researchers, students, and other users. With this goal in mind, we created comprehensive questions with answers that provide additional guidance to inexperienced reviewers. We also wanted to include space for documenting where the systematic review reported on each item being assessed. This space also lets the reviewer explain how they arrived at their assessment of that item.

DART evaluates how well a systematic review assesses studies with varying study designs. We needed to be able to summarize evidence for all types of clinical questions, not just intervention questions, so we needed a single tool that could evaluate a variety of research designs: not just randomized controlled trials (RCTs), but also observational studies. This feature is important because more systematic reviews now include observational studies and are no longer limited to RCTs.

It is also important to assess whether the studies included in a systematic review are synthesized appropriately. When there is substantial heterogeneity, it is inappropriate to pool studies with noticeably different populations, interventions, outcomes, study designs, or treatment effects. Review authors need to state that they considered heterogeneity and, if they detected it, how they accounted for it.

DART also assesses whether systematic review authors disclosed any financial or intellectual conflicts of interest, and whether any potential authors were disqualified from serving as authors on the systematic review because of their conflicts of interest.
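DART asks whether review authors considered heterogeneity, but this article does not prescribe how they should measure it. As one illustration, the minimal sketch below computes Cochran's Q and the I² statistic, a heterogeneity measure widely reported in meta-analyses, from per-study effect estimates and their variances using fixed-effect inverse-variance weights. The function name `cochran_q_and_i2` and the example numbers are hypothetical and are not part of DART; treat this as a sketch, not a validated analysis routine.

```python
def cochran_q_and_i2(effects, variances):
    """Return (Q, df, I2 as a percentage) for per-study effect estimates.

    effects   -- effect estimates on an additive scale (e.g. log odds ratios)
    variances -- the matching within-study variances (all > 0)
    """
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two studies with matching variances")
    # Inverse-variance weights, as in a fixed-effect meta-analysis.
    weights = [1.0 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    # Cochran's Q: weighted sum of squared deviations from the pooled estimate.
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I2: share of total variation attributed to between-study heterogeneity,
    # truncated at zero when Q does not exceed its degrees of freedom.
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, df, i2


# Hypothetical example: three equally precise studies with diverging effects.
q, df, i2 = cochran_q_and_i2([0.1, 0.5, 0.9], [0.04, 0.04, 0.04])
print(round(q, 2), df, round(i2, 1))  # 8.0 2 75.0
```

In this made-up example, an I² of 75% means most of the variation among the three results would be attributed to real differences between studies rather than chance, the kind of finding that should prompt a reader to check how the review authors handled heterogeneity before pooling.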

Six methodologists participated in the first round of pilot testing. We met weekly to discuss our evaluations of various systematic reviews using DART. Based on these discussions and evaluations, we revised DART to improve its clarity, the consistency of responses, and agreement between reviewers.

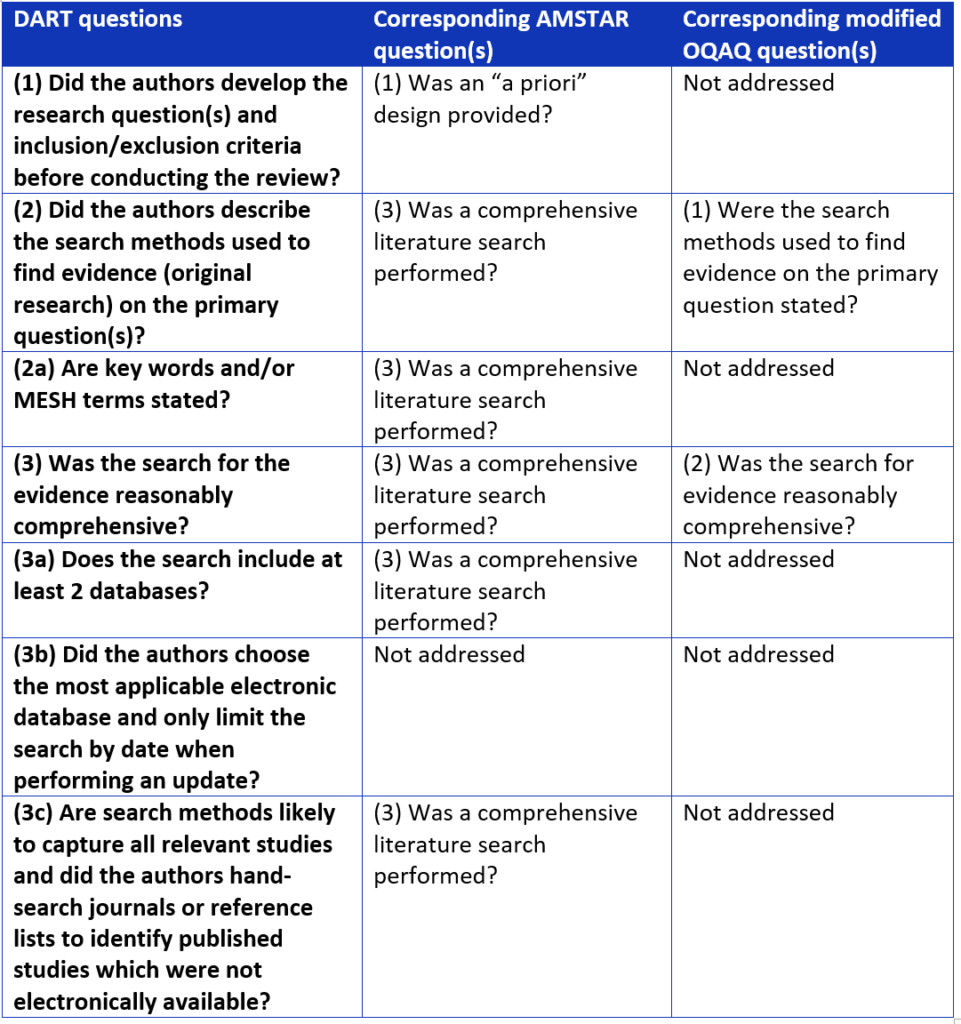

Next, 4 methodologists systematically compared DART with 2 validated review tools (OQAQ and AMSTAR).9,10 We used a nominal group technique to brainstorm strengths, weaknesses, and suggestions for improving DART. We compared the performance of the 3 tools, identifying variation in reviewer responses. We also mapped any overlapping questions among the 3 tools (see Table 3).

Table 3. Overlapping questions between systematic review assessment tools.

Based on these findings, we revised DART again and performed another round of pilot testing to evaluate its performance. We then made additional revisions, compared this version of DART with the March 2011 Standards for Systematic Reviews from the Institute of Medicine,1 and performed a final round of pilot testing. The final round included assessment of both intra-observer and inter-observer reliability.
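The article does not say which statistic was used to quantify reliability. One common choice for categorical ratings is Cohen's kappa, which corrects observed agreement for the agreement expected by chance; the same calculation can compare two reviewers' ratings of the same reviews (inter-observer) or one reviewer's ratings made at two different times (intra-observer). The minimal sketch below assumes hypothetical response labels (`"yes"`, `"no"`, `"unclear"`) that are not necessarily DART's actual response options, and the function name `cohens_kappa` is likewise illustrative.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two sets of categorical ratings of the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: proportion of items given the same rating.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal category frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    p_expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_expected == 1.0:
        # Both raters used one identical category throughout; kappa is undefined.
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical ratings of 10 appraisal items by two reviewers.
reviewer_1 = ["yes", "yes", "no", "yes", "unclear", "no", "yes", "yes", "no", "yes"]
reviewer_2 = ["yes", "no", "no", "yes", "unclear", "no", "yes", "yes", "yes", "yes"]
print(round(cohens_kappa(reviewer_1, reviewer_2), 2))  # 0.63
```

Here the reviewers agree on 80% of items, but because 46% agreement would be expected by chance from their rating patterns alone, kappa is about 0.63, a more conservative picture of reliability than raw percent agreement.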

DART was published in the June 2015 edition of the World Journal of Meta-Analysis13 and is currently used for evaluating the quality of systematic reviews by the Evidence-Based Care team at BJC HealthCare, and as a part of guideline development for CHEST and TheEvidenceDoc.

We invite you to try out the DART tool and share your experience in the comments section of TheEvidenceDoc website. The Excel version of the DART tool and downloadable publication are available on the website.

References

1. Eden J, Levit L, Berg A. Committee on Standards for Systematic Reviews of Comparative Effectiveness Research; Institute of Medicine. Finding What Works in Health Care: Standards for Systematic Reviews. Washington, DC: The National Academies Press; 2011.

2. Qaseem A, Forland F, MacBeth F, et al. Guidelines International Network: Toward International Standards for Clinical Practice Guidelines. Ann Intern Med 2012;156:525-531.

3. National Guideline Clearinghouse. NGC Inclusion Criteria. Accessed May 9, 2016.

4. Moher D, Tetzlaff J, Tricco AC, Sampson M, Altman DG. Epidemiology and reporting characteristics of systematic reviews. PLoS Med 2007;4:e78.

5. Bastian H, Glasziou P, Chalmers I. Seventy-Five Trials and Eleven Systematic Reviews a Day: How Will We Ever Keep Up? PLoS Med 2010;7:e1000326.

6. Page MJ, Shamseer L, Altman DG, et al. Epidemiology and reporting characteristics of systematic reviews: 2014 update. Cochrane Colloquium, Vienna, Austria, October 3-7, 2015. Accessed May 12, 2016. http://2015.colloquium.cochrane.org/abstracts/epidemiology-and-reporting-characteristics-systematic-reviews-2014-update

7. Viswanathan M, Ansari MT, Berkman ND, et al. Assessing the Risk of Bias of Individual Studies in Systematic Reviews of Health Care Interventions. Agency for Healthcare Research and Quality Methods Guide for Comparative Effectiveness Reviews. March 2012. AHRQ Publication No. 12-EHC047-EF. Available at: www.effectivehealthcare.ahrq.gov/.

8. Moher D, Liberati A, Tetzlaff J, et al. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. Ann Intern Med 2009;151:264-269.

9. Oxman AD, Guyatt GH. Validation of an index of the quality of review articles. J Clin Epidemiol 1991;44(11):1271-1278.

10. Shea B, Grimshaw J, Wells G, et al. Development of AMSTAR: a measurement tool to assess the methodological quality of systematic reviews. BMC Med Res Methodol 2007;7:10.

11. Higgins JPT, Green S, editors. Cochrane Handbook for Systematic Reviews of Interventions 4.2.6 [updated September 2006]. In: The Cochrane Library, Issue 4, 2006. Chichester, UK: John Wiley & Sons, Ltd.

12. Sacks H, Berrier J, Reitman D, et al. Meta-Analyses of Randomized Controlled Trials. N Engl J Med 1987;316:450-455.

13. Diekemper R, Ireland B, Merz L. Development of the Documentation and Appraisal Review Tool for systematic reviews. World J Metaanal 2015;3(3):142-150.

About the authors:

Rebecca Diekemper received her Master’s training in epidemiology and biostatistics from Emory University’s Rollins School of Public Health. She has worked in the field of evidence-based medicine, including systematic review and guideline development, for more than eight years. At BJC HealthCare, Ms. Diekemper designed and conducted systematic reviews and consulted with system leadership on the evidence around quality measures and standards in BJC hospitals. Prior to joining Doctor Evidence, Ms. Diekemper was a guideline methodologist for the American College of Chest Physicians, where she managed the development of clinical practice guidelines. As a guideline methodologist, she led multidisciplinary panels of clinical experts through the systematic review process, the formulation of evidence-based recommendations, and guideline drafting; she also trained and managed other guideline methodologists. In addition to serving as an author on guidelines and methodology articles, she has served as an instructor for guideline and systematic review workshops and has developed training modules and quality assessment tools, such as a tool for assessing the quality of systematic reviews.

Dr. Ireland has over 35 years of experience and is respected as a national adviser on the application of evidence-based methods to health care quality. She has served on several National Quality Forum (NQF) and Agency for Healthcare Research and Quality (AHRQ) Steering Committees and Technical Panels. She is a peer reviewer for several major medical journals, including JAMA Internal Medicine, Annals of Internal Medicine, Annals of Family Medicine, and CHEST. She has been an invited presenter at international conferences, including the Guidelines International Network and EvidenceLive in Oxford. She has developed and delivered novel training programs and webinars for a wide variety of learners, from nursing and medical students to practicing clinicians and administrators.

Dr. Ireland is a developer of new methods in research synthesis. She is a co-developer of the DART tool, which medical specialty societies and hospital and health care organizations use to evaluate the quality of systematic reviews.

Dr. Ireland brings innovation and expertise in evidence-based methods to your organization. She's an experienced guide who can lead you on the best path to evidence-based care improvement.

Liana Merz currently leads the Evidence-Based Care group at the BJC HealthCare Center for Clinical Excellence. Her previous roles include time as both Research Coordinator and Research Instructor at Washington University School of Medicine. She holds a doctorate and master’s degree from Saint Louis University and has previously published on various infection prevention and occupational health related topics.