Assessing the Evidence Series

By Gordon H. Guyatt, MD, MSc

and Jason W. Busse, DC, PhD

Risk of Bias: A Better Term than Alternatives

Authors in general, and authors of systematic reviews in particular, when addressing studies comparing alternative interventions, often refer to the "quality" of the studies. They may also refer to the "methodological quality," the "validity," or the "internal validity" (distinguished from "external validity" which is synonymous with generalizability or applicability) of the studies.

Each of these terms may refer to risk of bias: the likelihood that, because of reported flaws in design and execution of a study (we only ever know what was reported – not what was actually done), the results are at risk of a systematic deviation from the truth (i.e., overestimating or underestimating the true treatment effect). Risk of bias should be distinguished from random error, which does not have a direction and is best captured by the confidence interval around the best estimate of effect.

Each of these terms, however, may not refer (or not exclusively refer) to risk of bias. The GRADE group,1 for instance, uses “quality” to refer to confidence in the estimates of treatment effect.2 In this use of the term, quality refers not only to risk of bias, but also to issues such as precision, consistency, and directness of evidence. Some authors use the term "validity" to mean not only risk of bias, but also considerations of generalizability or applicability, and even precision. The term "risk of bias" is therefore preferable: it avoids the ambiguity of alternative terms.

Assessing Risk of Bias in Randomized Trials

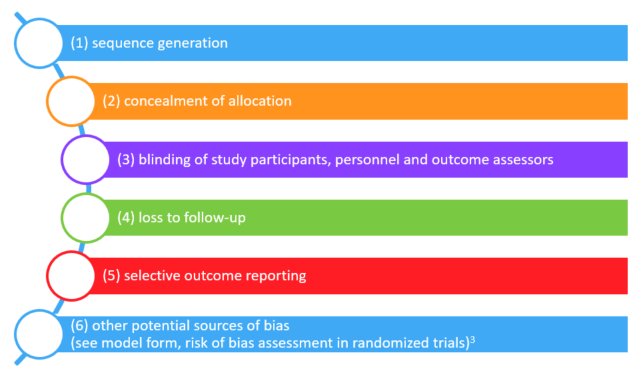

Several dozen systems for assessing risk of bias in randomized trials are available. The Cochrane Collaboration has brought some order to the resulting confusion by developing a "risk of bias" instrument that many would consider a gold standard.3 The Cochrane risk of bias tool identifies six possible sources of bias in randomized (or quasi-randomized) trials:

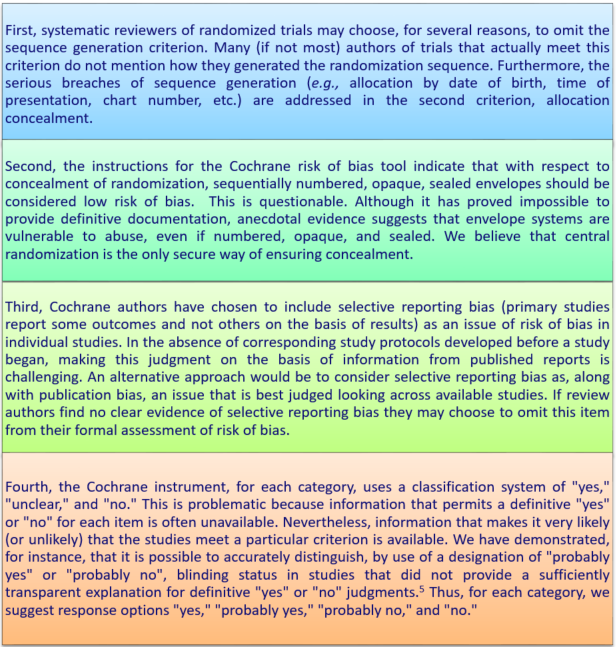

No system is perfect, and we have some reservations about the Cochrane risk of bias tool. These reservations are reflected in a revised risk of bias form that we have created.4

We have demonstrated the reproducibility of these response options for rating of blinding.5 Reviewer judgments are, however, likely to be reproducible only if detailed criteria for decisions are available. We have developed such criteria for blinding which are presented in an appendix.

Rating of Risk of Bias should be Outcome Specific

Traditionally, systematic review authors have provided a single rating of risk of bias for a particular study. This tradition is, unfortunately, both persistent and misguided. The reason it is misguided is that risk of bias can differ between outcomes. Consider, for instance, a surgical trial the outcomes of which include both quality of life and disease-specific mortality. Patients completing self-administered questionnaires may be unblinded, but adjudicators of cause-specific mortality may be blinded. Similarly, one might anticipate substantially greater loss to follow-up for quality of life than for all-cause mortality. Thus, it may well turn out that the quality of life outcome is associated with high risk of bias and the cause-specific mortality outcome is associated with a low risk of bias. A single rating of risk of bias for a trial is appropriate only if the risk of bias is identical for each of the relevant outcomes.

Risk of Bias Should Most Often be on a Component by Component Basis

Considerations exist that make a single rating of risk of bias for a particular study, and a particular outcome, appealing. Such a rating may facilitate decisions regarding rating down risk of bias across an entire body of evidence. Further, in explaining heterogeneity in results by risk of bias, if one repeats the analysis for each component, one risks false positive findings as a result of multiple testing.

On the other hand, the extent to which individual components impact overall risk of bias is very uncertain and likely to differ across circumstances, raising challenges in coming to a decision regarding overall risk of bias for a particular study and outcome. Further, empirical evidence suggests aggregated risk of bias scores can be misleading.6 In general, we avoid such aggregated scores.

References:

- Guyatt GH, Oxman AD, Vist GE, et al. GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ. 2008;336(7650):924-926.

- Guyatt GH, Oxman AD, Kunz R, Vist GE, Falck-Ytter Y, Schunemann HJ. What is "quality of evidence" and why is it important to clinicians? BMJ. 2008;336(7651):995-998.

- Higgins JP, Altman D. Assessing the risk of bias in included studies. In: Higgins J, Green S, eds. Cochrane Handbook for Systematic Reviews of Interventions 5.0.1. Chichester, U.K.: John Wiley & Sons; 2008.

- Guyatt G, Busse JW. Modification of Cochrane Tool to assess risk of bias in randomized trials.

- Akl E, Sun X, Busse J, et al. Specific instructions for estimating unclearly reported blinding status in randomized clinical trials were reliable and valid. J Clin Epidemiol. 2012;65(3):262-267.

- Dechartres A, Altman DG, Trinquart L, Boutron I, Ravaud P. Association between analytic strategy and estimates of treatment outcomes in meta-analyses. JAMA. 2014;312(6):623-630.

About the authors:

Professor Guyatt is a Distinguished Professor of Clinical Epidemiology and Biostatistics at McMaster University. He coined the term “evidence based medicine” (EBM) in 1991, and since then has been a leading advocate of evidence-based approaches to clinical decision-making. His over 1,000 publications have been cited over 80,000 times.

Jason Busse is an Assistant Professor in the Departments of Anesthesia and Clinical Epidemiology & Biostatistics at McMaster University. He has authored over 150 peer-reviewed publications with a focus on chronic pain, disability management, predictors of recovery, and methodological research.